Automatic Code Generation

Clean Go code from DynamoDB JSON schemas without external dependencies: generated typed structs, constants, and query builders

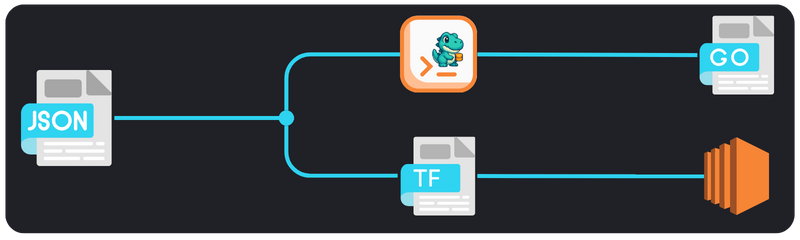

A CLI tool that transforms declarative DynamoDB JSON schemas into ready-to-use Go packages for working with the database. A single `godyno` command produces a fully typed package with validation, query builders, helper functions, and the supporting code needed to integrate with AWS DynamoDB, eliminating repetitive boilerplate.

```shell
# generate Go code from the JSON schema
$ godyno -c schema.json -d ./gen # -mode all

# or
$ godyno -c schema.json -d ./gen -mode min

# result: a new file
# ./gen/basemixed.go

# create the DynamoDB table from the JSON schema (Terraform)
$ export TF_VAR_schema=$(cat schema.json)
$ terraform apply
```

`schema.json`:

```json
{
  "table_name": "base-mixed",
  "hash_key": "pk",
  "range_key": "sk",
  "attributes": [
    { "name": "pk", "type": "S" },
    { "name": "sk", "type": "S" }
  ],
  "common_attributes": [
    { "name": "name", "type": "S" },
    { "name": "count", "type": "N" },
    { "name": "is_active", "type": "BOOL" },
    { "name": "tags", "type": "SS" },
    { "name": "scores", "type": "NS" }
  ],
  "secondary_indexes": []
}
```

Generated `./gen/basemixed.go`:

```go
package basemixed

import (
	"context"
	"fmt"
	"reflect"
	"sort"
	"strconv"
	"strings"

	"github.com/aws/aws-lambda-go/events"
	"github.com/aws/aws-sdk-go-v2/aws"
	"github.com/aws/aws-sdk-go-v2/feature/dynamodb/attributevalue"
	"github.com/aws/aws-sdk-go-v2/feature/dynamodb/expression"
	"github.com/aws/aws-sdk-go-v2/service/dynamodb"
	"github.com/aws/aws-sdk-go-v2/service/dynamodb/types"
)
```

```go
const (
	// TableName is the DynamoDB table name for all operations.
	TableName = "base-mixed"

	// ColumnPk is the "pk" attribute name.
	ColumnPk = "pk"
	// ColumnSk is the "sk" attribute name.
	ColumnSk = "sk"
	// ColumnName is the "name" attribute name.
	ColumnName = "name"
	// ColumnCount is the "count" attribute name.
	ColumnCount = "count"
	// ColumnIsActive is the "is_active" attribute name.
	ColumnIsActive = "is_active"
	// ColumnTags is the "tags" attribute name.
	ColumnTags = "tags"
	// ColumnScores is the "scores" attribute name.
	ColumnScores = "scores"
)
```
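The constants follow a visible pattern: each attribute name becomes `Column` plus its PascalCase form (`is_active` becomes `ColumnIsActive`, `pk` becomes `ColumnPk`). A minimal sketch of that mapping, assuming the generator simply splits on underscores and capitalizes each segment; the helper `columnConstName` is hypothetical, and godyno's real rules for acronyms, digits, or hyphens may differ.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
	"unicode/utf8"
)

// columnConstName is a hypothetical helper that turns a snake_case attribute
// name into an exported Go constant name, e.g. "is_active" -> "ColumnIsActive".
func columnConstName(attr string) string {
	var b strings.Builder
	b.WriteString("Column")
	for _, part := range strings.Split(attr, "_") {
		if part == "" {
			continue // skip empty segments from leading, trailing, or doubled underscores
		}
		r, size := utf8.DecodeRuneInString(part)
		b.WriteRune(unicode.ToUpper(r)) // capitalize the first rune of each segment
		b.WriteString(part[size:])
	}
	return b.String()
}

func main() {
	for _, attr := range []string{"pk", "sk", "name", "count", "is_active", "tags", "scores"} {
		fmt.Printf("%s = %q\n", columnConstName(attr), attr)
	}
}
```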

```go
var (
	// AttributeNames contains all table attribute names for projection expressions.
	// Example: expression.NamesList(expression.Name(AttributeNames[0]))
	AttributeNames = []string{
		"pk",
		"sk",
		"name",
		"count",
		"is_active",
		"tags",
		"scores",
	}

	// KeyAttributeNames contains primary key attributes for key operations.
	// Example: validateKeys(item, KeyAttributeNames)
	KeyAttributeNames = []string{
		"pk",
		"sk",
	}
)

// OperatorType defines the type of operation for queries and filters.
// Provides type-safe operator constants for DynamoDB expressions.
type OperatorType string

const (
	// Equality and comparison operators - work with all comparable types
	EQ  OperatorType = "="  // Equal to
	NE  OperatorType = "<>" // Not equal to
	GT  OperatorType = ">"  // Greater than
	LT  OperatorType = "<"  // Less than
	GTE OperatorType = ">=" // Greater than or equal
	LTE OperatorType = "<=" // Less than or equal

	// Range operator for between comparisons
	BETWEEN OperatorType = "BETWEEN" // Between two values (inclusive)

	// String operators - work with String types and Sets
	CONTAINS     OperatorType = "contains"     // Contains substring or set member
	NOT_CONTAINS OperatorType = "not_contains" // Does not contain substring or member
	BEGINS_WITH  OperatorType = "begins_with"  // String starts with prefix

	// Set operators for scalar values only (not DynamoDB Sets SS/NS)
	IN     OperatorType = "IN"     // Value is in list of values
	NOT_IN OperatorType = "NOT_IN" // Value is not in list of values

	// Existence operators - work with all types
	EXISTS     OperatorType = "attribute_exists"     // Attribute exists
	NOT_EXISTS OperatorType = "attribute_not_exists" // Attribute does not exist
)

// ConditionType defines whether this is a key condition or filter condition.
// Key conditions are used in Query operations, filters in both Query and Scan.
type ConditionType string

const (
	KeyCondition    ConditionType = "KEY"    // For partition/sort key conditions
	FilterCondition ConditionType = "FILTER" // For non-key attribute filtering
)

// Condition represents a single query or filter condition with validation metadata.
type Condition struct {
	Field    string        // Attribute name
	Operator OperatorType  // Operation type
	Values   []any         // Operation values
	Type     ConditionType // Key or filter condition
}

// Type-safe handler functions for different expression types.
// Provides compile-time safety for DynamoDB expression building.
type (
	KeyOperatorHandler       func(expression.KeyBuilder, []any) expression.KeyConditionBuilder
	ConditionOperatorHandler func(expression.NameBuilder, []any) expression.ConditionBuilder
)

// keyOperatorHandlers provides O(1) lookup for key condition operations.
// Only includes operators valid for key conditions (partition/sort keys).
var keyOperatorHandlers = map[OperatorType]KeyOperatorHandler{
	EQ: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.Equal(expression.Value(values[0]))
	},
	GT: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.GreaterThan(expression.Value(values[0]))
	},
	LT: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.LessThan(expression.Value(values[0]))
	},
	GTE: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.GreaterThanEqual(expression.Value(values[0]))
	},
	LTE: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.LessThanEqual(expression.Value(values[0]))
	},
	BETWEEN: func(field expression.KeyBuilder, values []any) expression.KeyConditionBuilder {
		return field.Between(expression.Value(values[0]), expression.Value(values[1]))
	},
}

// allowedKeyConditionOperators defines operators valid for key conditions.
// Single source of truth for key condition validation.
var allowedKeyConditionOperators = map[OperatorType]bool{
	EQ:      true,
	GT:      true,
	LT:      true,
	GTE:     true,
	LTE:     true,
	BETWEEN: true,
}

// conditionOperatorHandlers provides O(1) lookup for filter operations.
// Includes all operators supported in filter expressions.
var conditionOperatorHandlers = map[OperatorType]ConditionOperatorHandler{
	// Basic comparison operators
	EQ: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.Equal(expression.Value(values[0]))
	},
	NE: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.NotEqual(expression.Value(values[0]))
	},
	GT: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.GreaterThan(expression.Value(values[0]))
	},
	LT: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.LessThan(expression.Value(values[0]))
	},
	GTE: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.GreaterThanEqual(expression.Value(values[0]))
	},
	LTE: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.LessThanEqual(expression.Value(values[0]))
	},
	BETWEEN: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.Between(expression.Value(values[0]), expression.Value(values[1]))
	},

	// String and set operations
	CONTAINS: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.Contains(fmt.Sprintf("%v", values[0]))
	},
	NOT_CONTAINS: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return expression.Not(field.Contains(fmt.Sprintf("%v", values[0])))
	},
	BEGINS_WITH: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return field.BeginsWith(fmt.Sprintf("%v", values[0]))
	},

	// Scalar value list operations (not for DynamoDB Sets)
	IN: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		if len(values) == 0 {
			return expression.AttributeNotExists(field)
		}
		if len(values) == 1 {
			return field.Equal(expression.Value(values[0]))
		}
		operands := make([]expression.OperandBuilder, len(values))
		for i, v := range values {
			operands[i] = expression.Value(v)
		}
		return field.In(operands[0], operands[1:]...)
	},
	NOT_IN: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		if len(values) == 0 {
			return expression.AttributeExists(field)
		}
		if len(values) == 1 {
			return field.NotEqual(expression.Value(values[0]))
		}
		operands := make([]expression.OperandBuilder, len(values))
		for i, v := range values {
			operands[i] = expression.Value(v)
		}
		return expression.Not(field.In(operands[0], operands[1:]...))
	},

	// Existence checks
	EXISTS: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return expression.AttributeExists(field)
	},
	NOT_EXISTS: func(field expression.NameBuilder, values []any) expression.ConditionBuilder {
		return expression.AttributeNotExists(field)
	},
}
```
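Both handler maps are dispatch tables: the operator is the key, and a small function builds the matching expression. The same pattern can be shown without the AWS SDK. The sketch below is a hypothetical, stdlib-only renderer that emits DynamoDB condition-expression syntax with `#name` and `:vN` placeholders for a handful of operators; in the generated code, the SDK's `expression` package produces these strings and the placeholder maps instead.

```go
package main

import (
	"fmt"
	"strings"
)

// OperatorType mirrors a few of the generated operator constants.
type OperatorType string

const (
	EQ          OperatorType = "="
	GT          OperatorType = ">"
	BETWEEN     OperatorType = "BETWEEN"
	BEGINS_WITH OperatorType = "begins_with"
	IN          OperatorType = "IN"
	EXISTS      OperatorType = "attribute_exists"
)

// renderer turns a name placeholder and value placeholders into condition text.
type renderer func(name string, vals []string) string

// renderers is a dispatch table keyed by operator, shaped like conditionOperatorHandlers.
var renderers = map[OperatorType]renderer{
	EQ:          func(n string, v []string) string { return n + " = " + v[0] },
	GT:          func(n string, v []string) string { return n + " > " + v[0] },
	BETWEEN:     func(n string, v []string) string { return n + " BETWEEN " + v[0] + " AND " + v[1] },
	BEGINS_WITH: func(n string, v []string) string { return "begins_with(" + n + ", " + v[0] + ")" },
	IN:          func(n string, v []string) string { return n + " IN (" + strings.Join(v, ", ") + ")" },
	EXISTS:      func(n string, _ []string) string { return "attribute_exists(" + n + ")" },
}

// minValues is the smallest value count each operator accepts (the role ValidateValues plays).
var minValues = map[OperatorType]int{EQ: 1, GT: 1, BETWEEN: 2, BEGINS_WITH: 1, IN: 1, EXISTS: 0}

// render builds expression text with "#field" and ":vN" placeholders.
// The actual values would go into ExpressionAttributeValues, which this sketch omits.
func render(field string, op OperatorType, nValues int) (string, error) {
	r, ok := renderers[op]
	if !ok {
		return "", fmt.Errorf("unsupported operator %q", op)
	}
	if nValues < minValues[op] {
		return "", fmt.Errorf("operator %s needs at least %d values", op, minValues[op])
	}
	vals := make([]string, nValues)
	for i := range vals {
		vals[i] = fmt.Sprintf(":v%d", i)
	}
	return r("#"+field, vals), nil
}

func main() {
	s, _ := render("count", BETWEEN, 2)
	fmt.Println(s) // #count BETWEEN :v0 AND :v1
}
```

Keeping the arity check next to the table means a lookup can never index past the placeholders it was given.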

```go
// ValidateValues checks if the number of values is correct for the operator.
// Prevents runtime errors by validating value count at build time.
func ValidateValues(op OperatorType, values []any) bool {
	switch op {
	case EQ, NE, GT, LT, GTE, LTE, CONTAINS, NOT_CONTAINS, BEGINS_WITH:
		return len(values) == 1 // Single value operators
	case BETWEEN:
		return len(values) == 2 // Start and end values
	case IN, NOT_IN:
		return len(values) >= 1 // At least one value required
	case EXISTS, NOT_EXISTS:
		return len(values) == 0 // No values needed
	default:
		return false
	}
}

// IsKeyConditionOperator checks if operator can be used in key conditions.
// Key conditions have stricter rules than filter conditions.
func IsKeyConditionOperator(op OperatorType) bool {
	return allowedKeyConditionOperators[op]
}

// ValidateOperator checks if operator is valid for the given field using schema.
// Provides type-safe operator validation based on DynamoDB field types.
func ValidateOperator(fieldName string, op OperatorType) bool {
	if fi, ok := TableSchema.FieldsMap[fieldName]; ok {
		return fi.SupportsOperator(op)
	}
	return false
}

// BuildConditionExpression converts operator to DynamoDB filter expression.
// Creates type-safe filter conditions with full validation.
// Example: BuildConditionExpression("name", EQ, []any{"John"})
func BuildConditionExpression(field string, op OperatorType, values []any) (expression.ConditionBuilder, error) {
	fieldInfo, exists := TableSchema.FieldsMap[field]
	if !exists {
		return expression.ConditionBuilder{}, fmt.Errorf("field %s not found in schema", field)
	}
	if !fieldInfo.SupportsOperator(op) {
		return expression.ConditionBuilder{}, fmt.Errorf("operator %s not supported for field %s (type %s)", op, field, fieldInfo.DynamoType)
	}
	if !ValidateValues(op, values) {
		return expression.ConditionBuilder{}, fmt.Errorf("invalid number of values for operator %s", op)
	}
	handler := conditionOperatorHandlers[op]
	fieldExpr := expression.Name(field)
	result := handler(fieldExpr, values)
	return result, nil
}
```

```go
// BuildKeyConditionExpression converts operator to DynamoDB key condition.
// Creates type-safe key conditions for Query operations only.
// Example: BuildKeyConditionExpression("user_id", EQ, []any{"123"})
func BuildKeyConditionExpression(field string, op OperatorType, values []any) (expression.KeyConditionBuilder, error) {
	fieldInfo, exists := TableSchema.FieldsMap[field]
	if !exists {
		return expression.KeyConditionBuilder{}, fmt.Errorf("field %s not found in schema", field)
	}
	if !fieldInfo.IsKey {
		return expression.KeyConditionBuilder{}, fmt.Errorf("field %s is not a key field", field)
	}
	// A key field's type may allow operators (e.g. NE, CONTAINS, BEGINS_WITH) that have
	// no entry in keyOperatorHandlers; reject them here instead of calling a nil handler.
	if !IsKeyConditionOperator(op) {
		return expression.KeyConditionBuilder{}, fmt.Errorf("operator %s not allowed in key conditions", op)
	}
	if !fieldInfo.SupportsOperator(op) {
		return expression.KeyConditionBuilder{}, fmt.Errorf("operator %s not supported for field %s (type %s)", op, field, fieldInfo.DynamoType)
	}
	if !ValidateValues(op, values) {
		return expression.KeyConditionBuilder{}, fmt.Errorf("invalid number of values for operator %s", op)
	}
	handler := keyOperatorHandlers[op]
	fieldExpr := expression.Key(field)
	result := handler(fieldExpr, values)
	return result, nil
}
```

```go
// FieldInfo contains metadata about a schema field with operator validation.
// Provides O(1) lookup for supported DynamoDB operations per field type.
type FieldInfo struct {
	DynamoType       string
	IsKey            bool
	IsHashKey        bool
	IsRangeKey       bool
	AllowedOperators map[OperatorType]bool
}

// SupportsOperator checks if this field supports the given operator.
// Returns false for invalid operator/type combinations.
// Example: stringField.SupportsOperator(BEGINS_WITH) -> true
func (fi FieldInfo) SupportsOperator(op OperatorType) bool {
	return fi.AllowedOperators[op]
}

// buildAllowedOperators returns the set of allowed operators for a DynamoDB type.
// Implements DynamoDB operator compatibility rules for each data type.
func buildAllowedOperators(dynamoType string) map[OperatorType]bool {
	allowed := make(map[OperatorType]bool)
	switch dynamoType {
	case "S": // String - supports all comparison and string operations
		allowed[EQ] = true
		allowed[NE] = true
		allowed[GT] = true
		allowed[LT] = true
		allowed[GTE] = true
		allowed[LTE] = true
		allowed[BETWEEN] = true
		allowed[CONTAINS] = true
		allowed[NOT_CONTAINS] = true
		allowed[BEGINS_WITH] = true
		allowed[IN] = true
		allowed[NOT_IN] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "N": // Number - supports comparison operations, no string functions
		allowed[EQ] = true
		allowed[NE] = true
		allowed[GT] = true
		allowed[LT] = true
		allowed[GTE] = true
		allowed[LTE] = true
		allowed[BETWEEN] = true
		allowed[IN] = true
		allowed[NOT_IN] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "BOOL": // Boolean - only equality and existence checks
		allowed[EQ] = true
		allowed[NE] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "SS": // String Set - membership operations only, not IN/NOT_IN
		allowed[CONTAINS] = true
		allowed[NOT_CONTAINS] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "NS": // Number Set - membership operations only, not IN/NOT_IN
		allowed[CONTAINS] = true
		allowed[NOT_CONTAINS] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "BS": // Binary Set - membership operations only
		allowed[CONTAINS] = true
		allowed[NOT_CONTAINS] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "L": // List - only existence checks
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "M": // Map - only existence checks
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	case "NULL": // Null - only existence checks
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	default:
		// Unknown types - basic operations only
		allowed[EQ] = true
		allowed[NE] = true
		allowed[EXISTS] = true
		allowed[NOT_EXISTS] = true
	}
	return allowed
}
```
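`buildAllowedOperators` encodes DynamoDB's operator/type compatibility as a `switch`. The same rules can also be kept as data, which makes the matrix easy to print or test. A minimal sketch of that alternative layout, trimmed to a few types and operators; it is not what godyno generates, and unlike the generated `default` branch it allows nothing for unknown types.

```go
package main

import "fmt"

type Op string

const (
	EQ         Op = "="
	GT         Op = ">"
	BeginsWith Op = "begins_with"
	Contains   Op = "contains"
	In         Op = "IN"
	Exists     Op = "attribute_exists"
)

// set builds a lookup set from a list of operators.
func set(ops ...Op) map[Op]bool {
	m := make(map[Op]bool, len(ops))
	for _, o := range ops {
		m[o] = true
	}
	return m
}

// allowedByType mirrors a subset of the rules in buildAllowedOperators.
var allowedByType = map[string]map[Op]bool{
	"S":    set(EQ, GT, BeginsWith, Contains, In, Exists),
	"N":    set(EQ, GT, In, Exists),
	"BOOL": set(EQ, Exists),
	"SS":   set(Contains, Exists), // sets: membership via contains, not IN
	"NS":   set(Contains, Exists),
}

// supports reports whether op is allowed for the DynamoDB type.
// Indexing the nil inner map of an unknown type safely yields false.
func supports(dynamoType string, op Op) bool {
	return allowedByType[dynamoType][op]
}

func main() {
	fmt.Println(supports("SS", In), supports("S", BeginsWith)) // false true
}
```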

```go
// DynamoSchema represents the complete table schema with indexes and metadata.
type DynamoSchema struct {
	TableName        string
	HashKey          string
	RangeKey         string
	Attributes       []Attribute
	CommonAttributes []Attribute
	SecondaryIndexes []SecondaryIndex
	FieldsMap        map[string]FieldInfo
}

// Attribute represents a DynamoDB table attribute with its type.
type Attribute struct {
	Name string // Attribute name
	Type string // DynamoDB type (S, N, BOOL, SS, NS, etc.)
}

// CompositeKeyPart represents a part of a composite key structure.
// Used for complex key patterns in GSI/LSI definitions.
type CompositeKeyPart struct {
	IsConstant bool   // true if this part is a constant value
	Value      string // the constant value or attribute name
}

// SecondaryIndex represents a GSI or LSI with optional composite keys.
// Supports both simple and composite key structures for advanced access patterns.
type SecondaryIndex struct {
	Name             string
	HashKey          string
	RangeKey         string
	ProjectionType   string
	HashKeyParts     []CompositeKeyPart // for composite hash keys
	RangeKeyParts    []CompositeKeyPart // for composite range keys
	NonKeyAttributes []string           // projected attributes for INCLUDE
}

// SchemaItem represents a single DynamoDB item with all table attributes.
// All fields are properly tagged for AWS SDK marshaling/unmarshaling.
type SchemaItem struct {
	Pk       string   `dynamodbav:"pk"`
	Sk       string   `dynamodbav:"sk"`
	Name     string   `dynamodbav:"name"`
	Count    int      `dynamodbav:"count"`
	IsActive bool     `dynamodbav:"is_active"`
	Tags     []string `dynamodbav:"tags,stringset"`
	Scores   []int    `dynamodbav:"scores,numberset"`
}
```

```go
// TableSchema contains the complete schema definition with pre-computed metadata.
// Used throughout the generated code for validation and operator checking.
var TableSchema = DynamoSchema{
	TableName: "base-mixed",
	HashKey:   "pk",
	RangeKey:  "sk",
	Attributes: []Attribute{
		{Name: "pk", Type: "S"},
		{Name: "sk", Type: "S"},
	},
	CommonAttributes: []Attribute{
		{Name: "name", Type: "S"},
		{Name: "count", Type: "N"},
		{Name: "is_active", Type: "BOOL"},
		{Name: "tags", Type: "SS"},
		{Name: "scores", Type: "NS"},
	},
	SecondaryIndexes: []SecondaryIndex{},
	FieldsMap: map[string]FieldInfo{
		"pk": {
			DynamoType:       "S",
			IsKey:            true,
			IsHashKey:        true,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("S"),
		},
		"sk": {
			DynamoType:       "S",
			IsKey:            true,
			IsHashKey:        false,
			IsRangeKey:       true,
			AllowedOperators: buildAllowedOperators("S"),
		},
		"name": {
			DynamoType:       "S",
			IsKey:            false,
			IsHashKey:        false,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("S"),
		},
		"count": {
			DynamoType:       "N",
			IsKey:            false,
			IsHashKey:        false,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("N"),
		},
		"is_active": {
			DynamoType:       "BOOL",
			IsKey:            false,
			IsHashKey:        false,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("BOOL"),
		},
		"tags": {
			DynamoType:       "SS",
			IsKey:            false,
			IsHashKey:        false,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("SS"),
		},
		"scores": {
			DynamoType:       "NS",
			IsKey:            false,
			IsHashKey:        false,
			IsRangeKey:       false,
			AllowedOperators: buildAllowedOperators("NS"),
		},
	},
}
```

```go
// FilterMixin provides common filtering logic for Query and Scan operations.
// Supports all DynamoDB filter operators with type validation.
type FilterMixin struct {
	FilterConditions []expression.ConditionBuilder
	UsedKeys         map[string]bool
	Attributes       map[string]any
}

// NewFilterMixin creates a new FilterMixin instance with initialized maps.
func NewFilterMixin() FilterMixin {
	return FilterMixin{
		FilterConditions: make([]expression.ConditionBuilder, 0),
		UsedKeys:         make(map[string]bool),
		Attributes:       make(map[string]any),
	}
}

// Filter adds a filter condition using the universal operator system.
// Validates operator compatibility and value count before adding;
// invalid conditions are silently ignored.
func (fm *FilterMixin) Filter(field string, op OperatorType, values ...any) {
	if !ValidateValues(op, values) {
		return
	}
	if !ValidateOperator(field, op) {
		return
	}
	filterCond, err := BuildConditionExpression(field, op, values)
	if err != nil {
		return
	}
	fm.FilterConditions = append(fm.FilterConditions, filterCond)
	fm.UsedKeys[field] = true
	if op == EQ && len(values) == 1 {
		fm.Attributes[field] = values[0]
	}
}
```
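`Filter` returns without a trace when a field, operator, or value count is invalid, so a typo in a field name simply drops the condition. If you would rather surface such mistakes, one common alternative is to record errors on the builder and check them once before executing. A hypothetical sketch of that pattern (`filterBuilder` and its `known` map are illustrative, not part of the generated code; `errors.Join` needs Go 1.20 or later):

```go
package main

import (
	"errors"
	"fmt"
)

// filterBuilder is a hypothetical builder that records problems instead of dropping them.
type filterBuilder struct {
	known      map[string]bool // attribute names from the schema
	conditions []string
	errs       []error
}

// Filter appends a condition for known fields and records an error otherwise.
func (b *filterBuilder) Filter(field, op string, values ...any) *filterBuilder {
	if !b.known[field] {
		b.errs = append(b.errs, fmt.Errorf("field %q not found in schema", field))
		return b
	}
	b.conditions = append(b.conditions, fmt.Sprintf("%s %s %v", field, op, values))
	return b
}

// Err joins every recorded problem; nil means all conditions were accepted.
func (b *filterBuilder) Err() error { return errors.Join(b.errs...) }

func main() {
	b := &filterBuilder{known: map[string]bool{"name": true, "count": true}}
	b.Filter("name", "=", "John").Filter("cuont", ">", 5)
	fmt.Println(len(b.conditions), b.Err())
}
```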

```go
// PaginationMixin provides pagination support for Query and Scan operations.
type PaginationMixin struct {
	LimitValue        *int
	ExclusiveStartKey map[string]types.AttributeValue
}

// NewPaginationMixin creates a new PaginationMixin instance.
func NewPaginationMixin() PaginationMixin {
	return PaginationMixin{}
}

// Limit sets the maximum number of items DynamoDB evaluates in one request.
// Filters are applied after the limit, so fewer items may be returned.
// Example: .Limit(25)
func (pm *PaginationMixin) Limit(limit int) {
	pm.LimitValue = &limit
}

// StartFrom sets the exclusive start key for pagination.
// Use LastEvaluatedKey from previous response for next page.
// Example: .StartFrom(previousResponse.LastEvaluatedKey)
func (pm *PaginationMixin) StartFrom(lastEvaluatedKey map[string]types.AttributeValue) {
	pm.ExclusiveStartKey = lastEvaluatedKey
}
```
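`StartFrom` takes the `LastEvaluatedKey` of the previous response; a caller keeps requesting pages until that key comes back empty (the SDK's `dynamodb.NewQueryPaginator` wraps the same loop). The sketch below shows that contract with an in-memory stand-in for DynamoDB, so it runs without AWS access. `fakeQuery` and `collectAll` are hypothetical, and keys are plain strings rather than `map[string]types.AttributeValue`.

```go
package main

import "fmt"

// fakeQuery returns up to limit items starting after startKey, plus the key to resume from.
// An empty lastKey means there are no more pages, like an empty LastEvaluatedKey.
func fakeQuery(data []string, startKey string, limit int) (items []string, lastKey string) {
	if limit < 1 {
		limit = 1 // DynamoDB requires a positive Limit; clamp to keep the sketch safe
	}
	start := 0
	if startKey != "" {
		for i, v := range data {
			if v == startKey {
				start = i + 1
				break
			}
		}
	}
	end := start + limit
	if end >= len(data) {
		return data[start:], ""
	}
	return data[start:end], data[end-1]
}

// collectAll follows lastKey from page to page until the source reports no more data.
func collectAll(data []string, limit int) (all []string, pages int) {
	startKey := ""
	for {
		items, lastKey := fakeQuery(data, startKey, limit)
		all = append(all, items...)
		pages++
		if lastKey == "" {
			return all, pages
		}
		startKey = lastKey
	}
}

func main() {
	all, pages := collectAll([]string{"a", "b", "c", "d", "e"}, 2)
	fmt.Println(all, pages) // [a b c d e] 3
}
```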

```go
// KeyConditionMixin provides key condition logic for Query operations only.
// Supports partition key and sort key conditions with automatic index selection.
type KeyConditionMixin struct {
	KeyConditions    map[string]expression.KeyConditionBuilder
	SortDescending   bool
	PreferredSortKey string
}

// NewKeyConditionMixin creates a new KeyConditionMixin instance.
func NewKeyConditionMixin() KeyConditionMixin {
	return KeyConditionMixin{
		KeyConditions: make(map[string]expression.KeyConditionBuilder),
	}
}

// With adds a key condition using the universal operator system.
// Only valid for partition and sort key attributes.
func (kcm *KeyConditionMixin) With(field string, op OperatorType, values ...any) {
	if !ValidateValues(op, values) {
		return
	}
	fieldInfo, exists := TableSchema.FieldsMap[field]
	if !exists {
		return
	}
	if !fieldInfo.IsKey {
		return
	}
	if !ValidateOperator(field, op) {
		return
	}
	keyCond, err := BuildKeyConditionExpression(field, op, values)
	if err != nil {
		return
	}
	kcm.KeyConditions[field] = keyCond
}

// WithPreferredSortKey sets preferred sort key for index selection.
// Useful when multiple indexes match the query pattern.
func (kcm *KeyConditionMixin) WithPreferredSortKey(key string) {
	kcm.PreferredSortKey = key
}

// OrderByDesc sets descending sort order for results.
// Only affects sort key ordering, not filter results.
func (kcm *KeyConditionMixin) OrderByDesc() {
	kcm.SortDescending = true
}

// OrderByAsc sets ascending sort order for results (default).
func (kcm *KeyConditionMixin) OrderByAsc() {
	kcm.SortDescending = false
}
```
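DynamoDB's `Query` requires an equality condition on the partition key and accepts at most one condition on the sort key (`=`, `<`, `<=`, `>`, `>=`, `BETWEEN`, or `begins_with`). `KeyConditionMixin` stores conditions in a map keyed by field, so a second `With` on the same field replaces the first; the remaining rules can be checked before sending the request. A hypothetical pre-flight check along those lines, using this table's `pk`/`sk` names and plain strings for operators:

```go
package main

import "fmt"

// checkKeyConditions validates a field->operator map against DynamoDB's Query rules:
// the hash key must be present with "=", and only the hash and range keys may appear.
func checkKeyConditions(conds map[string]string, hashKey, rangeKey string) error {
	op, ok := conds[hashKey]
	if !ok {
		return fmt.Errorf("missing condition on partition key %q", hashKey)
	}
	if op != "=" {
		return fmt.Errorf("partition key %q requires =, got %s", hashKey, op)
	}
	for field := range conds {
		if field != hashKey && field != rangeKey {
			return fmt.Errorf("%q is not a key attribute", field)
		}
	}
	return nil
}

func main() {
	fmt.Println(checkKeyConditions(map[string]string{"pk": "=", "sk": "BETWEEN"}, "pk", "sk")) // <nil>
	fmt.Println(checkKeyConditions(map[string]string{"sk": ">"}, "pk", "sk"))
}
```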

```go
// CONVENIENCE METHODS - Only available in ALL mode

// FilterEQ adds equality filter condition.
// Example: .FilterEQ("status", "active")
func (fm *FilterMixin) FilterEQ(field string, value any) {
	fm.Filter(field, EQ, value)
}

// FilterContains adds contains filter for strings or sets.
// Example: .FilterContains("tags", "important")
func (fm *FilterMixin) FilterContains(field string, value any) {
	fm.Filter(field, CONTAINS, value)
}

// FilterNotContains adds not contains filter for strings or sets.
func (fm *FilterMixin) FilterNotContains(field string, value any) {
	fm.Filter(field, NOT_CONTAINS, value)
}

// FilterBeginsWith adds begins_with filter for strings.
// Example: .FilterBeginsWith("email", "admin@")
func (fm *FilterMixin) FilterBeginsWith(field string, value any) {
	fm.Filter(field, BEGINS_WITH, value)
}

// FilterBetween adds range filter for comparable values.
// Example: .FilterBetween("price", 10, 100)
func (fm *FilterMixin) FilterBetween(field string, start, end any) {
	fm.Filter(field, BETWEEN, start, end)
}

// FilterGT adds greater than filter.
func (fm *FilterMixin) FilterGT(field string, value any) {
	fm.Filter(field, GT, value)
}

// FilterLT adds less than filter.
func (fm *FilterMixin) FilterLT(field string, value any) {
	fm.Filter(field, LT, value)
}

// FilterGTE adds greater than or equal filter.
func (fm *FilterMixin) FilterGTE(field string, value any) {
	fm.Filter(field, GTE, value)
}

// FilterLTE adds less than or equal filter.
func (fm *FilterMixin) FilterLTE(field string, value any) {
	fm.Filter(field, LTE, value)
}

// FilterExists checks if attribute exists.
// Example: .FilterExists("optional_field")
func (fm *FilterMixin) FilterExists(field string) {
	fm.Filter(field, EXISTS)
}

// FilterNotExists checks if attribute does not exist.
func (fm *FilterMixin) FilterNotExists(field string) {
	fm.Filter(field, NOT_EXISTS)
}

// FilterNE adds not equal filter.
func (fm *FilterMixin) FilterNE(field string, value any) {
	fm.Filter(field, NE, value)
}

// FilterIn adds IN filter for scalar values.
// For DynamoDB Sets (SS/NS), use FilterContains instead.
// Example: .FilterIn("category", "books", "electronics")
func (fm *FilterMixin) FilterIn(field string, values ...any) {
	if len(values) == 0 {
		return
	}
	fm.Filter(field, IN, values...)
}

// FilterNotIn adds NOT_IN filter for scalar values.
// For DynamoDB Sets (SS/NS), use FilterNotContains instead.
func (fm *FilterMixin) FilterNotIn(field string, values ...any) {
	if len(values) == 0 {
		return
	}
	fm.Filter(field, NOT_IN, values...)
}

// CONVENIENCE METHODS - Only available in ALL mode

// WithEQ adds equality key condition.
// Required for partition key, optional for sort key.
// Example: .WithEQ("user_id", "123")
func (kcm *KeyConditionMixin) WithEQ(field string, value any) {
	kcm.With(field, EQ, value)
}

// WithBetween adds range key condition for sort keys.
// Example: .WithBetween("created_at", start_time, end_time)
func (kcm *KeyConditionMixin) WithBetween(field string, start, end any) {
	kcm.With(field, BETWEEN, start, end)
}

// WithGT adds greater than key condition for sort keys.
func (kcm *KeyConditionMixin) WithGT(field string, value any) {
	kcm.With(field, GT, value)
}

// WithGTE adds greater than or equal key condition for sort keys.
func (kcm *KeyConditionMixin) WithGTE(field string, value any) {
	kcm.With(field, GTE, value)
}

// WithLT adds less than key condition for sort keys.
func (kcm *KeyConditionMixin) WithLT(field string, value any) {
	kcm.With(field, LT, value)
}

// WithLTE adds less than or equal key condition for sort keys.
func (kcm *KeyConditionMixin) WithLTE(field string, value any) {
	kcm.With(field, LTE, value)
}
```

```go
// QueryBuilder provides a fluent interface for building type-safe DynamoDB queries.
// Combines FilterMixin, PaginationMixin, and KeyConditionMixin for comprehensive query building.
// Supports automatic index selection, composite keys, and all DynamoDB query patterns.
type QueryBuilder struct {
	FilterMixin              // Filter conditions for any table attribute
	PaginationMixin          // Limit and pagination support
	KeyConditionMixin        // Key conditions for partition and sort keys
	IndexName         string // Optional index name override
}

// NewQueryBuilder creates a new QueryBuilder instance with initialized mixins.
// All mixins are properly initialized for immediate use.
// Example: query := NewQueryBuilder().WithEQ("user_id", "123").FilterEQ("status", "active")
func NewQueryBuilder() *QueryBuilder {
	return &QueryBuilder{
		FilterMixin:       NewFilterMixin(),
		PaginationMixin:   NewPaginationMixin(),
		KeyConditionMixin: NewKeyConditionMixin(),
	}
}
```
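`QueryBuilder` embeds the three mixins, so their methods are promoted onto it. The mixin methods return nothing, which would end a method chain; that is presumably why the generated code redeclares `Limit`, `StartFrom`, `OrderByDesc`, `With`, `Filter`, and the `With*` helpers on `*QueryBuilder`, each delegating to the mixin and returning `qb`. A reduced, self-contained illustration of that shadowing; `pagination` and `builder` are hypothetical names.

```go
package main

import "fmt"

// pagination is a stand-in mixin whose method returns nothing.
type pagination struct{ limit int }

func (p *pagination) Limit(n int) { p.limit = n }

// builder embeds the mixin; its own Limit shadows the promoted one and returns the builder.
type builder struct {
	pagination
	filters []string
}

func (b *builder) Limit(n int) *builder {
	b.pagination.Limit(n) // delegate to the embedded mixin explicitly
	return b
}

func (b *builder) Filter(expr string) *builder {
	b.filters = append(b.filters, expr)
	return b
}

func main() {
	b := (&builder{}).Limit(25).Filter("count > 5")
	fmt.Println(b.limit, b.filters) // 25 [count > 5]
}
```

Without the wrapper, `(&builder{}).Limit(25)` would resolve to the mixin's method and yield no value to chain on.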

```go
// Limit sets the maximum number of items to evaluate and returns QueryBuilder for method chaining.
// DynamoDB applies filters after the limit, so a single request may return fewer items.
// Example: query.Limit(25)
func (qb *QueryBuilder) Limit(limit int) *QueryBuilder {
	qb.PaginationMixin.Limit(limit)
	return qb
}

// StartFrom sets the exclusive start key and returns QueryBuilder for method chaining.
// Use LastEvaluatedKey from previous response for pagination.
// Example: query.StartFrom(previousResponse.LastEvaluatedKey)
func (qb *QueryBuilder) StartFrom(lastEvaluatedKey map[string]types.AttributeValue) *QueryBuilder {
	qb.PaginationMixin.StartFrom(lastEvaluatedKey)
	return qb
}

// OrderByDesc sets descending sort order and returns QueryBuilder for method chaining.
// Only affects sort key ordering, not filter results.
// Example: query.OrderByDesc() // newest first
func (qb *QueryBuilder) OrderByDesc() *QueryBuilder {
	qb.KeyConditionMixin.OrderByDesc()
	return qb
}

// OrderByAsc sets ascending sort order and returns QueryBuilder for method chaining.
// This is the default sort order.
// Example: query.OrderByAsc() // oldest first
func (qb *QueryBuilder) OrderByAsc() *QueryBuilder {
	qb.KeyConditionMixin.OrderByAsc()
	return qb
}

// WithPreferredSortKey sets the preferred sort key and returns QueryBuilder for method chaining.
// Hints the index selection algorithm when multiple indexes could satisfy the query.
// Example: query.WithPreferredSortKey("created_at")
func (qb *QueryBuilder) WithPreferredSortKey(key string) *QueryBuilder {
	qb.KeyConditionMixin.WithPreferredSortKey(key)
	return qb
}

// HELPER METHODS for universal index access

// getIndexByName finds index by name in schema metadata.
func (qb *QueryBuilder) getIndexByName(indexName string) *SecondaryIndex {
	for i := range TableSchema.SecondaryIndexes {
		if TableSchema.SecondaryIndexes[i].Name == indexName {
			return &TableSchema.SecondaryIndexes[i]
		}
	}
	return nil
}

// getNonConstantParts returns only non-constant parts of composite key.
func (qb *QueryBuilder) getNonConstantParts(parts []CompositeKeyPart) []CompositeKeyPart {
	var result []CompositeKeyPart
	for _, part := range parts {
		if !part.IsConstant {
			result = append(result, part)
		}
	}
	return result
}

// setCompositeKey builds and sets composite key from parts and values.
func (qb *QueryBuilder) setCompositeKey(keyName string, parts []CompositeKeyPart, values []any) {
	nonConstantParts := qb.getNonConstantParts(parts)
	for i, part := range nonConstantParts {
		if i < len(values) {
			qb.Attributes[part.Value] = values[i]
			qb.UsedKeys[part.Value] = true
		}
	}
	compositeValue := qb.buildCompositeKeyValue(parts)
	qb.Attributes[keyName] = compositeValue
	qb.UsedKeys[keyName] = true
	qb.KeyConditions[keyName] = expression.Key(keyName).Equal(expression.Value(compositeValue))
}
```
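`setCompositeKey` copies the caller's values into the non-constant parts and then asks `buildCompositeKeyValue` (not shown in this excerpt) for the final key string. A common way to build such keys is to join constant prefixes and attribute values with a separator. The sketch below assumes `#` as the separator and uses a local copy of `CompositeKeyPart`; neither the separator nor `buildKey` is confirmed to match godyno's implementation.

```go
package main

import (
	"fmt"
	"strings"
)

// CompositeKeyPart mirrors the generated type: a constant literal or an attribute name.
type CompositeKeyPart struct {
	IsConstant bool
	Value      string
}

// buildKey joins constants and looked-up attribute values with "#" (an assumed separator).
// It fails if a non-constant part has no value yet.
func buildKey(parts []CompositeKeyPart, attrs map[string]any) (string, error) {
	segs := make([]string, 0, len(parts))
	for _, p := range parts {
		if p.IsConstant {
			segs = append(segs, p.Value)
			continue
		}
		v, ok := attrs[p.Value]
		if !ok {
			return "", fmt.Errorf("missing value for %q", p.Value)
		}
		segs = append(segs, fmt.Sprint(v))
	}
	return strings.Join(segs, "#"), nil
}

func main() {
	parts := []CompositeKeyPart{{IsConstant: true, Value: "USER"}, {Value: "name"}, {Value: "count"}}
	key, err := buildKey(parts, map[string]any{"name": "alice", "count": 3})
	fmt.Println(key, err) // USER#alice#3 <nil>
}
```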

// SCHEMA INTROSPECTION METHODS

// GetIndexNames returns all available index names.

func GetIndexNames() []string {

names := make([]string, len(TableSchema.SecondaryIndexes))

for i, index := range TableSchema.SecondaryIndexes {

names[i] = index.Name

}

return names

}

// GetIndexInfo returns detailed information about an index.

func GetIndexInfo(indexName string) *IndexInfo {

for _, index := range TableSchema.SecondaryIndexes {

if index.Name == indexName {

return &IndexInfo{

Name: index.Name,

Type: getIndexType(index),

HashKey: index.HashKey,

RangeKey: index.RangeKey,

IsHashComposite: len(index.HashKeyParts) > 0,

IsRangeComposite: len(index.RangeKeyParts) > 0,

HashKeyParts: countNonConstantParts(index.HashKeyParts),

RangeKeyParts: countNonConstantParts(index.RangeKeyParts),

ProjectionType: index.ProjectionType,

}

}

}

return nil

}

// IndexInfo provides metadata about a table index.

type IndexInfo struct {

Name string

Type string

HashKey string

RangeKey string

IsHashComposite bool

IsRangeComposite bool

HashKeyParts int

RangeKeyParts int

ProjectionType string

}

// getIndexType returns human-readable index type.

func getIndexType(index SecondaryIndex) string {

if index.HashKey == "" {

return "LSI"

}

return "GSI"

}

// countNonConstantParts counts non-constant parts in composite key.

func countNonConstantParts(parts []CompositeKeyPart) int {

count := 0

for _, part := range parts {

if !part.IsConstant {

count++

}

}

return count

}

// With adds key condition and returns QueryBuilder for method chaining.

// Only works with partition and sort key attributes for efficient querying.

// Example: query.With("user_id", EQ, "123").With("created_at", GT, timestamp)

func (qb *QueryBuilder) With(field string, op OperatorType, values ...any) *QueryBuilder {

qb.KeyConditionMixin.With(field, op, values...)

if op == EQ && len(values) == 1 {

qb.Attributes[field] = values[0]

qb.UsedKeys[field] = true

}

return qb

}

// Filter adds a filter condition and returns QueryBuilder for method chaining.

// Wraps FilterMixin.Filter with fluent interface support.

func (qb *QueryBuilder) Filter(field string, op OperatorType, values ...any) *QueryBuilder {

qb.FilterMixin.Filter(field, op, values...)

return qb

}

// CONVENIENCE METHODS - Only available in ALL mode

// WithEQ adds equality key condition and returns QueryBuilder for method chaining.

// Required for partition keys, commonly used for sort keys.

// Example: query.WithEQ("user_id", "123")

func (qb *QueryBuilder) WithEQ(field string, value any) *QueryBuilder {

qb.KeyConditionMixin.WithEQ(field, value)

qb.Attributes[field] = value

qb.UsedKeys[field] = true

return qb

}

// WithBetween adds an inclusive BETWEEN key condition and returns the QueryBuilder
// for method chaining. Only valid for sort keys, not partition keys.
// Example: query.WithBetween("timestamp", startTime, endTime)
func (qb *QueryBuilder) WithBetween(field string, start, end any) *QueryBuilder {
	qb.KeyConditionMixin.WithBetween(field, start, end)
	// The bounds are deliberately not stored in qb.Attributes: Build turns every
	// entry there that is not an index key into an equality filter, so synthetic
	// "<field>_start"/"<field>_end" entries would exclude every item.
	qb.UsedKeys[field] = true
	return qb
}

// WithGT adds greater than key condition and returns QueryBuilder for method chaining.
// Only valid for sort keys in range queries.
// Example: query.WithGT("created_at", yesterday)
func (qb *QueryBuilder) WithGT(field string, value any) *QueryBuilder {
	qb.KeyConditionMixin.WithGT(field, value)
	qb.Attributes[field] = value
	qb.UsedKeys[field] = true
	return qb
}

// WithGTE adds greater than or equal key condition and returns QueryBuilder for method chaining.
// Only valid for sort keys in range queries.
// Example: query.WithGTE("score", minimumScore)
func (qb *QueryBuilder) WithGTE(field string, value any) *QueryBuilder {
	qb.KeyConditionMixin.WithGTE(field, value)
	qb.Attributes[field] = value
	qb.UsedKeys[field] = true
	return qb
}

// WithLT adds less than key condition and returns QueryBuilder for method chaining.
// Only valid for sort keys in range queries.
// Example: query.WithLT("expiry_date", now)
func (qb *QueryBuilder) WithLT(field string, value any) *QueryBuilder {
	qb.KeyConditionMixin.WithLT(field, value)
	qb.Attributes[field] = value
	qb.UsedKeys[field] = true
	return qb
}

// WithLTE adds less than or equal key condition and returns QueryBuilder for method chaining.
// Only valid for sort keys in range queries.
// Example: query.WithLTE("price", maxBudget)
func (qb *QueryBuilder) WithLTE(field string, value any) *QueryBuilder {
	qb.KeyConditionMixin.WithLTE(field, value)
	qb.Attributes[field] = value
	qb.UsedKeys[field] = true
	return qb
}

// WithIndexHashKey sets the hash key for any index by name.
// Simple and composite keys are handled automatically from schema metadata;
// for composite keys, pass the variable parts in the order they appear in the schema.
// The call is a silent no-op if the index is unknown or the number of values
// does not match the number of variable key parts.
// Example: query.WithIndexHashKey("user-status-index", "user123")
// Example: query.WithIndexHashKey("tenant-user-index", "tenant1", "user123") // composite
func (qb *QueryBuilder) WithIndexHashKey(indexName string, values ...any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil {
		return qb
	}
	if index.HashKeyParts != nil {
		nonConstantParts := qb.getNonConstantParts(index.HashKeyParts)
		if len(values) != len(nonConstantParts) {
			return qb
		}
		qb.setCompositeKey(index.HashKey, index.HashKeyParts, values)
	} else {
		if len(values) != 1 {
			return qb
		}
		qb.Attributes[index.HashKey] = values[0]
		qb.UsedKeys[index.HashKey] = true
		qb.KeyConditions[index.HashKey] = expression.Key(index.HashKey).Equal(expression.Value(values[0]))
	}
	return qb
}
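
// Illustrative sketch (not part of the generated output): WithIndexHashKey
// silently ignores a call whose value count does not match the schema. The
// hypothetical helper below performs the same assembly (constants plus
// caller values in schema order, joined with "#") but reports a mismatch
// as an error instead. sketchKeyPart is an assumed stand-in mirroring
// CompositeKeyPart.
type sketchKeyPart struct {
	Value      string // constant text, or the attribute name of a variable part
	IsConstant bool
}

// sketchFillCompositeKey joins constant parts and the supplied values
// (consumed in the order the variable parts appear) with "#".
func sketchFillCompositeKey(parts []sketchKeyPart, values ...string) (string, error) {
	want := 0
	for _, p := range parts {
		if !p.IsConstant {
			want++
		}
	}
	if len(values) != want {
		return "", fmt.Errorf("composite key needs %d values, got %d", want, len(values))
	}
	out := make([]string, 0, len(parts))
	next := 0
	for _, p := range parts {
		if p.IsConstant {
			out = append(out, p.Value)
			continue
		}
		out = append(out, values[next])
		next++
	}
	return strings.Join(out, "#"), nil
}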

// WithIndexRangeKey sets an equality range key condition for any index by name.
// Simple and composite keys are handled automatically from schema metadata;
// for composite keys, pass the variable parts in the order they appear in the schema.
// The call is a silent no-op if the index is unknown, has no range key, or the
// number of values does not match the number of variable key parts.
// Example: query.WithIndexRangeKey("user-status-index", "active")
// Example: query.WithIndexRangeKey("date-type-index", "2023-01-01", "ORDER") // composite
func (qb *QueryBuilder) WithIndexRangeKey(indexName string, values ...any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" {
		return qb
	}
	if index.RangeKeyParts != nil {
		nonConstantParts := qb.getNonConstantParts(index.RangeKeyParts)
		if len(values) != len(nonConstantParts) {
			return qb
		}
		qb.setCompositeKey(index.RangeKey, index.RangeKeyParts, values)
	} else {
		if len(values) != 1 {
			return qb
		}
		qb.Attributes[index.RangeKey] = values[0]
		qb.UsedKeys[index.RangeKey] = true
		qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).Equal(expression.Value(values[0]))
	}
	return qb
}

// WithIndexRangeKeyBetween sets an inclusive BETWEEN range key condition for any index.
// Only works with simple range keys, not composite ones.
// Example: query.WithIndexRangeKeyBetween("date-index", startDate, endDate)
func (qb *QueryBuilder) WithIndexRangeKeyBetween(indexName string, start, end any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" || index.RangeKeyParts != nil {
		return qb
	}
	qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).Between(expression.Value(start), expression.Value(end))
	qb.UsedKeys[index.RangeKey] = true
	// The bounds are deliberately not stored in qb.Attributes: Build turns every
	// non-key entry there into an equality filter, so synthetic "_start"/"_end"
	// entries would exclude every item.
	return qb
}

// WithIndexRangeKeyGT sets range key condition for any index with GT operator.
// Only works with simple range keys, not composite ones.
// Example: query.WithIndexRangeKeyGT("score-index", 100)
func (qb *QueryBuilder) WithIndexRangeKeyGT(indexName string, value any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" || index.RangeKeyParts != nil {
		return qb
	}
	qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).GreaterThan(expression.Value(value))
	qb.UsedKeys[index.RangeKey] = true
	qb.Attributes[index.RangeKey] = value
	return qb
}

// WithIndexRangeKeyLT sets range key condition for any index with LT operator.
// Only works with simple range keys, not composite ones.
// Example: query.WithIndexRangeKeyLT("timestamp-index", cutoffTime)
func (qb *QueryBuilder) WithIndexRangeKeyLT(indexName string, value any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" || index.RangeKeyParts != nil {
		return qb
	}
	qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).LessThan(expression.Value(value))
	qb.UsedKeys[index.RangeKey] = true
	qb.Attributes[index.RangeKey] = value
	return qb
}

// WithIndexRangeKeyGTE sets range key condition for any index with GTE operator.
// Only works with simple range keys, not composite ones.
// Example: query.WithIndexRangeKeyGTE("score-index", 100)
func (qb *QueryBuilder) WithIndexRangeKeyGTE(indexName string, value any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" || index.RangeKeyParts != nil {
		return qb
	}
	qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).GreaterThanEqual(expression.Value(value))
	qb.UsedKeys[index.RangeKey] = true
	qb.Attributes[index.RangeKey] = value
	return qb
}

// WithIndexRangeKeyLTE sets range key condition for any index with LTE operator.
// Only works with simple range keys, not composite ones.
// Example: query.WithIndexRangeKeyLTE("timestamp-index", cutoffTime)
func (qb *QueryBuilder) WithIndexRangeKeyLTE(indexName string, value any) *QueryBuilder {
	index := qb.getIndexByName(indexName)
	if index == nil || index.RangeKey == "" || index.RangeKeyParts != nil {
		return qb
	}
	qb.KeyConditions[index.RangeKey] = expression.Key(index.RangeKey).LessThanEqual(expression.Value(value))
	qb.UsedKeys[index.RangeKey] = true
	qb.Attributes[index.RangeKey] = value
	return qb
}

// CONVENIENCE METHODS - Only available in ALL mode

// FilterEQ adds equality filter and returns QueryBuilder for method chaining.
// Example: query.FilterEQ("status", "active")
func (qb *QueryBuilder) FilterEQ(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterEQ(field, value)
	return qb
}

// FilterContains adds contains filter and returns QueryBuilder for method chaining.
// Works with String attributes (substring) and Set attributes (membership).
// Example: query.FilterContains("tags", "premium")
func (qb *QueryBuilder) FilterContains(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterContains(field, value)
	return qb
}

// FilterNotContains adds not contains filter and returns QueryBuilder for method chaining.
// Opposite of FilterContains for exclusion filtering.
func (qb *QueryBuilder) FilterNotContains(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterNotContains(field, value)
	return qb
}

// FilterBeginsWith adds begins_with filter and returns QueryBuilder for method chaining.
// Only works with String attributes for prefix matching.
// Example: query.FilterBeginsWith("email", "admin@")
func (qb *QueryBuilder) FilterBeginsWith(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterBeginsWith(field, value)
	return qb
}

// FilterBetween adds range filter and returns QueryBuilder for method chaining.
// Works with comparable types for inclusive range filtering.
// Example: query.FilterBetween("score", 80, 100)
func (qb *QueryBuilder) FilterBetween(field string, start, end any) *QueryBuilder {
	qb.FilterMixin.FilterBetween(field, start, end)
	return qb
}

// FilterGT adds greater than filter and returns QueryBuilder for method chaining.
// Example: query.FilterGT("last_login", cutoffDate)
func (qb *QueryBuilder) FilterGT(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterGT(field, value)
	return qb
}

// FilterLT adds less than filter and returns QueryBuilder for method chaining.
// Example: query.FilterLT("attempts", maxAttempts)
func (qb *QueryBuilder) FilterLT(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterLT(field, value)
	return qb
}

// FilterGTE adds greater than or equal filter and returns QueryBuilder for method chaining.
// Example: query.FilterGTE("age", minimumAge)
func (qb *QueryBuilder) FilterGTE(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterGTE(field, value)
	return qb
}

// FilterLTE adds less than or equal filter and returns QueryBuilder for method chaining.
// Example: query.FilterLTE("file_size", maxFileSize)
func (qb *QueryBuilder) FilterLTE(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterLTE(field, value)
	return qb
}

// FilterExists adds attribute exists filter and returns QueryBuilder for method chaining.
// Checks if the specified attribute exists in the item.
// Example: query.FilterExists("optional_field")
func (qb *QueryBuilder) FilterExists(field string) *QueryBuilder {
	qb.FilterMixin.FilterExists(field)
	return qb
}

// FilterNotExists adds attribute not exists filter and returns QueryBuilder for method chaining.
// Checks if the specified attribute does not exist in the item.
func (qb *QueryBuilder) FilterNotExists(field string) *QueryBuilder {
	qb.FilterMixin.FilterNotExists(field)
	return qb
}

// FilterNE adds not equal filter and returns QueryBuilder for method chaining.
// Example: query.FilterNE("status", "deleted")
func (qb *QueryBuilder) FilterNE(field string, value any) *QueryBuilder {
	qb.FilterMixin.FilterNE(field, value)
	return qb
}

// FilterIn adds IN filter and returns QueryBuilder for method chaining.
// For scalar values only - use FilterContains for DynamoDB Sets.
// Example: query.FilterIn("category", "books", "electronics", "clothing")
func (qb *QueryBuilder) FilterIn(field string, values ...any) *QueryBuilder {
	qb.FilterMixin.FilterIn(field, values...)
	return qb
}

// FilterNotIn adds NOT_IN filter and returns QueryBuilder for method chaining.
// For scalar values only - use FilterNotContains for DynamoDB Sets.
func (qb *QueryBuilder) FilterNotIn(field string, values ...any) *QueryBuilder {
	qb.FilterMixin.FilterNotIn(field, values...)
	return qb
}

// Build selects an index for the query and assembles its conditions.
// Candidate secondary indexes are tried in this order:
//   - indexes whose range key matches PreferredSortKey, if that hint is set
//   - then indexes with more composite key parts (constant and variable)
//
// The first index whose hash key can be built from the recorded key values,
// and whose composite range key (if any) is complete, is used. If no index
// matches but the table's hash key is set, the query falls back to the base
// table. Recorded values that are not key attributes become equality filters.
// Returns the index name ("" for the base table), key condition, filter
// condition, pagination key, and any error.
func (qb *QueryBuilder) Build() (string, expression.KeyConditionBuilder, *expression.ConditionBuilder, map[string]types.AttributeValue, error) {
	var filterCond *expression.ConditionBuilder

	sortedIndexes := make([]SecondaryIndex, len(TableSchema.SecondaryIndexes))
	copy(sortedIndexes, TableSchema.SecondaryIndexes)
	// SliceStable keeps schema order among equally ranked indexes, so the
	// selected index is deterministic.
	sort.SliceStable(sortedIndexes, func(i, j int) bool {
		if qb.PreferredSortKey != "" {
			iMatches := sortedIndexes[i].RangeKey == qb.PreferredSortKey
			jMatches := sortedIndexes[j].RangeKey == qb.PreferredSortKey
			if iMatches && !jMatches {
				return true
			}
			if !iMatches && jMatches {
				return false
			}
		}
		iParts := qb.calculateIndexParts(sortedIndexes[i])
		jParts := qb.calculateIndexParts(sortedIndexes[j])
		return iParts > jParts
	})

	for _, idx := range sortedIndexes {
		hashKeyCondition, hashKeyMatch := qb.buildHashKeyCondition(idx)
		if !hashKeyMatch {
			continue
		}
		rangeKeyCondition, rangeKeyMatch := qb.buildRangeKeyCondition(idx)
		if !rangeKeyMatch {
			continue
		}
		keyCondition := *hashKeyCondition
		if rangeKeyCondition != nil {
			keyCondition = keyCondition.And(*rangeKeyCondition)
		}
		filterCond = qb.buildFilterCondition(idx)
		return idx.Name, keyCondition, filterCond, qb.ExclusiveStartKey, nil
	}

	// Fall back to the base table when its hash key is known.
	if qb.UsedKeys[TableSchema.HashKey] {
		indexName := ""
		keyCondition := expression.Key(TableSchema.HashKey).Equal(expression.Value(qb.Attributes[TableSchema.HashKey]))
		if TableSchema.RangeKey != "" && qb.UsedKeys[TableSchema.RangeKey] {
			if cond, exists := qb.KeyConditions[TableSchema.RangeKey]; exists {
				keyCondition = keyCondition.And(cond)
			} else {
				keyCondition = keyCondition.And(expression.Key(TableSchema.RangeKey).Equal(expression.Value(qb.Attributes[TableSchema.RangeKey])))
			}
		}

		var filterConditions []expression.ConditionBuilder
		filterConditions = append(filterConditions, qb.FilterConditions...)
		for attrName, value := range qb.Attributes {
			if attrName != TableSchema.HashKey && attrName != TableSchema.RangeKey {
				filterConditions = append(filterConditions, expression.Name(attrName).Equal(expression.Value(value)))
			}
		}
		if len(filterConditions) > 0 {
			combinedFilter := filterConditions[0]
			for _, cond := range filterConditions[1:] {
				combinedFilter = combinedFilter.And(cond)
			}
			filterCond = &combinedFilter
		}
		return indexName, keyCondition, filterCond, qb.ExclusiveStartKey, nil
	}

	return "", expression.KeyConditionBuilder{}, nil, nil, fmt.Errorf("no suitable index found for the provided keys")
}
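
// Illustrative sketch (not part of the generated output): the ordering rule
// Build applies before trying indexes, reduced to plain data. Indexes whose
// range key matches the preferred sort key come first; ties are broken by
// the total number of composite key parts (more parts first), and a stable
// sort keeps schema order among equal candidates. sketchIndex is a
// hypothetical stand-in for SecondaryIndex.
type sketchIndex struct {
	Name     string
	RangeKey string
	Parts    int // total composite key parts, as counted by calculateIndexParts
}

// sketchOrderIndexes returns index names in the order Build would try them.
func sketchOrderIndexes(indexes []sketchIndex, preferredSortKey string) []string {
	sorted := make([]sketchIndex, len(indexes))
	copy(sorted, indexes)
	sort.SliceStable(sorted, func(i, j int) bool {
		if preferredSortKey != "" {
			iMatch := sorted[i].RangeKey == preferredSortKey
			jMatch := sorted[j].RangeKey == preferredSortKey
			if iMatch != jMatch {
				return iMatch // the matching index sorts first
			}
		}
		return sorted[i].Parts > sorted[j].Parts
	})
	names := make([]string, len(sorted))
	for i, idx := range sorted {
		names[i] = idx.Name
	}
	return names
}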

// calculateIndexParts counts the number of composite key parts in an index.
// Used for index selection priority - more specific indexes are preferred.
func (qb *QueryBuilder) calculateIndexParts(idx SecondaryIndex) int {
	parts := 0
	if idx.HashKeyParts != nil {
		parts += len(idx.HashKeyParts)
	}
	if idx.RangeKeyParts != nil {
		parts += len(idx.RangeKeyParts)
	}
	return parts
}

// buildHashKeyCondition creates the hash key condition for a given index.
// Supports both simple hash keys and composite hash keys.
// Returns the condition and whether the index hash key can be satisfied.
func (qb *QueryBuilder) buildHashKeyCondition(idx SecondaryIndex) (*expression.KeyConditionBuilder, bool) {
	if idx.HashKeyParts != nil {
		if qb.hasAllKeys(idx.HashKeyParts) {
			cond := qb.buildCompositeKeyCondition(idx.HashKeyParts)
			return &cond, true
		}
	} else if idx.HashKey != "" && qb.UsedKeys[idx.HashKey] {
		cond := expression.Key(idx.HashKey).Equal(expression.Value(qb.Attributes[idx.HashKey]))
		return &cond, true
	}
	return nil, false
}

// buildRangeKeyCondition creates the range key condition for a given index.
// Supports both simple range keys and composite range keys.
// Range keys are optional: it returns (nil, true) when the index has no range
// key or its simple range key was not used, and (nil, false), rejecting the
// index, only when a composite range key cannot be fully built.
func (qb *QueryBuilder) buildRangeKeyCondition(idx SecondaryIndex) (*expression.KeyConditionBuilder, bool) {
	if idx.RangeKeyParts != nil {
		if qb.hasAllKeys(idx.RangeKeyParts) {
			cond := qb.buildCompositeKeyCondition(idx.RangeKeyParts)
			return &cond, true
		}
		return nil, false
	}
	if idx.RangeKey == "" || !qb.UsedKeys[idx.RangeKey] {
		return nil, true
	}
	if cond, exists := qb.KeyConditions[idx.RangeKey]; exists {
		return &cond, true
	}
	cond := expression.Key(idx.RangeKey).Equal(expression.Value(qb.Attributes[idx.RangeKey]))
	return &cond, true
}

// buildFilterCondition builds the filter expression for the selected index.
// Explicit filter conditions are always included; recorded attribute values
// that are not part of the index key become equality filters. Filters are
// applied after items are read, so they shrink the result set but not the
// read capacity consumed.
func (qb *QueryBuilder) buildFilterCondition(idx SecondaryIndex) *expression.ConditionBuilder {
	var filterConditions []expression.ConditionBuilder
	filterConditions = append(filterConditions, qb.FilterConditions...)
	for attrName, value := range qb.Attributes {
		if qb.isPartOfIndexKey(attrName, idx) {
			continue
		}
		filterConditions = append(filterConditions, expression.Name(attrName).Equal(expression.Value(value)))
	}
	if len(filterConditions) == 0 {
		return nil
	}
	combinedFilter := filterConditions[0]
	for _, cond := range filterConditions[1:] {
		combinedFilter = combinedFilter.And(cond)
	}
	return &combinedFilter
}

// isPartOfIndexKey checks if an attribute is part of the index's key structure.
// Used to determine whether conditions should be key conditions or filter conditions.
func (qb *QueryBuilder) isPartOfIndexKey(attrName string, idx SecondaryIndex) bool {
	if idx.HashKeyParts != nil {
		for _, part := range idx.HashKeyParts {
			if !part.IsConstant && part.Value == attrName {
				return true
			}
		}
	} else if attrName == idx.HashKey {
		return true
	}
	if idx.RangeKeyParts != nil {
		for _, part := range idx.RangeKeyParts {
			if !part.IsConstant && part.Value == attrName {
				return true
			}
		}
	} else if attrName == idx.RangeKey {
		return true
	}
	return false
}

// BuildQuery constructs the final DynamoDB QueryInput with all expressions and parameters.
// Combines key conditions, filter conditions, pagination, and sorting options.
// Example: input, err := queryBuilder.BuildQuery()
func (qb *QueryBuilder) BuildQuery() (*dynamodb.QueryInput, error) {
	indexName, keyCond, filterCond, exclusiveStartKey, err := qb.Build()
	if err != nil {
		return nil, err
	}

	exprBuilder := expression.NewBuilder().WithKeyCondition(keyCond)
	if filterCond != nil {
		exprBuilder = exprBuilder.WithFilter(*filterCond)
	}
	expr, err := exprBuilder.Build()
	if err != nil {
		return nil, fmt.Errorf("failed to build expression: %w", err)
	}

	input := &dynamodb.QueryInput{
		TableName:                 aws.String(TableName),
		KeyConditionExpression:    expr.KeyCondition(),
		ExpressionAttributeNames:  expr.Names(),
		ExpressionAttributeValues: expr.Values(),
		ScanIndexForward:          aws.Bool(!qb.SortDescending),
	}
	if indexName != "" {
		input.IndexName = aws.String(indexName)
	}
	if filterCond != nil {
		input.FilterExpression = expr.Filter()
	}
	if qb.LimitValue != nil {
		input.Limit = aws.Int32(int32(*qb.LimitValue))
	}
	if exclusiveStartKey != nil {
		input.ExclusiveStartKey = exclusiveStartKey
	}
	return input, nil
}

// Execute runs the query against DynamoDB and returns strongly-typed results.
// It builds the input, sends a single Query request and unmarshals the items.
// Only one page is returned (DynamoDB stops each response at the Limit or at
// 1 MB of data read), and the response's LastEvaluatedKey is not exposed; use
// BuildQuery with client.Query directly when further pages are needed.
// Example: items, err := queryBuilder.Execute(ctx, dynamoClient)
func (qb *QueryBuilder) Execute(ctx context.Context, client *dynamodb.Client) ([]SchemaItem, error) {
	input, err := qb.BuildQuery()
	if err != nil {
		return nil, err
	}
	result, err := client.Query(ctx, input)
	if err != nil {
		return nil, fmt.Errorf("failed to execute query: %w", err)
	}
	var items []SchemaItem
	if err := attributevalue.UnmarshalListOfMaps(result.Items, &items); err != nil {
		return nil, fmt.Errorf("failed to unmarshal result: %w", err)
	}
	return items, nil
}
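
// Illustrative sketch (not part of the generated output): Execute returns a
// single page. To read every page, a caller can build the input with
// BuildQuery, call client.Query in a loop and copy each response's
// LastEvaluatedKey into the next request's ExclusiveStartKey until it comes
// back empty (the SDK's dynamodb.NewQueryPaginator automates the same loop).
// The generic helper below shows that loop with a hypothetical fetch callback
// so it stays SDK-independent; the string-keyed cursor map is an assumed
// stand-in for map[string]types.AttributeValue.
func sketchCollectAllPages[T any](fetch func(startKey map[string]string) ([]T, map[string]string, error)) ([]T, error) {
	var all []T
	var startKey map[string]string // nil means "start from the beginning"
	for {
		items, lastKey, err := fetch(startKey)
		if err != nil {
			return nil, err
		}
		all = append(all, items...)
		if len(lastKey) == 0 {
			return all, nil // no LastEvaluatedKey: this was the final page
		}
		startKey = lastKey
	}
}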

// hasAllKeys checks if all non-constant parts of a composite key are available.
// Used to determine if a composite key can be fully constructed from current conditions.
// Constants are always available, variables must be present in UsedKeys.
func (qb *QueryBuilder) hasAllKeys(parts []CompositeKeyPart) bool {
	for _, part := range parts {
		if !part.IsConstant && !qb.UsedKeys[part.Value] {
			return false
		}
	}
	return true
}

// buildCompositeKeyCondition creates a key condition for composite keys.
// Combines multiple key parts into a single equality condition using "#" separator.
// Used internally by the index selection algorithm for complex key structures.
func (qb *QueryBuilder) buildCompositeKeyCondition(parts []CompositeKeyPart) expression.KeyConditionBuilder {
	compositeKeyName := qb.getCompositeKeyName(parts)
	compositeValue := qb.buildCompositeKeyValue(parts)
	return expression.Key(compositeKeyName).Equal(expression.Value(compositeValue))
}

// getCompositeKeyName generates the attribute name for a composite key.
// For single parts, returns the part name directly.
// For multiple parts, joins them with "#" separator for DynamoDB storage.
// Example: ["user", "tenant"] -> "user#tenant"
func (qb *QueryBuilder) getCompositeKeyName(parts []CompositeKeyPart) string {
	switch len(parts) {
	case 0:
		return ""
	case 1:
		return parts[0].Value
	default:
		names := make([]string, len(parts))
		for i, part := range parts {
			names[i] = part.Value
		}
		return strings.Join(names, "#")
	}
}

// buildCompositeKeyValue constructs the actual value for a composite key.
// Combines constant values and variable values from query attributes.
// Uses "#" separator to create a single string value for DynamoDB.
// Example: constant "USER" + variable "123" -> "USER#123"
func (qb *QueryBuilder) buildCompositeKeyValue(parts []CompositeKeyPart) string {
	if len(parts) == 0 {
		return ""
	}
	values := make([]string, len(parts))
	for i, part := range parts {
		if part.IsConstant {
			values[i] = part.Value
		} else {
			values[i] = qb.formatAttributeValue(qb.Attributes[part.Value])
		}
	}
	return strings.Join(values, "#")
}
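
// Illustrative sketch (not part of the generated output): reading a composite
// value back into its parts means splitting on the same "#" separator. Since
// the separator is a bare "#", a variable value that itself contains "#"
// makes a plain split ambiguous. The hypothetical helper below assumes only
// the last part may contain "#"; strings.SplitN then keeps it intact.
func sketchSplitCompositeKey(value string, n int) ([]string, error) {
	parts := strings.SplitN(value, "#", n)
	if len(parts) != n {
		return nil, fmt.Errorf("expected %d parts in %q, got %d", n, value, len(parts))
	}
	return parts, nil
}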

// formatAttributeValue converts a Go value to its string form for composite keys.
// Strings and bools take a fast path; other values are marshaled with the AWS
// SDK and rendered from the resulting attribute value. nil becomes "", and
// string/number sets are joined with "," in marshaled order, so sets holding
// the same elements in a different order produce different keys.
func (qb *QueryBuilder) formatAttributeValue(value any) string {
	if value == nil {
		return ""
	}
	switch v := value.(type) {
	case string:
		return v
	case bool:
		return strconv.FormatBool(v)
	}

	av, err := attributevalue.Marshal(value)
	if err != nil {
		return fmt.Sprintf("%v", value)
	}
	switch typed := av.(type) {
	case *types.AttributeValueMemberS:
		return typed.Value
	case *types.AttributeValueMemberN:
		return typed.Value
	case *types.AttributeValueMemberBOOL:
		return strconv.FormatBool(typed.Value)
	case *types.AttributeValueMemberSS:
		return strings.Join(typed.Value, ",")
	case *types.AttributeValueMemberNS:
		return strings.Join(typed.Value, ",")
	default:
		return fmt.Sprintf("%v", value)
	}
}
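
// Illustrative sketch (not part of the generated output): integers reach a
// composite key as plain decimal strings, and DynamoDB compares string sort
// keys byte by byte, so "10" sorts before "9". When a numeric part must sort
// numerically inside a sort key, one common approach is to zero-pad it to a
// fixed width before building the key. The hypothetical helper below assumes
// non-negative integers and a caller-chosen width.
func sketchPadNumber(n uint64, width int) string {
	return fmt.Sprintf("%0*d", width, n) // e.g. width 6: 9 -> "000009"
}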

// ScanBuilder provides a fluent interface for building DynamoDB scan operations.
// Scans read every item in a table or index, applying filters after data is read.
// Use Query for efficient key-based access; use Scan for full table analysis.
// Combines FilterMixin and PaginationMixin for comprehensive scan functionality.
type ScanBuilder struct {
	FilterMixin                              // Filter conditions applied after reading items
	PaginationMixin                          // Limit and pagination support
	IndexName            string              // Optional secondary index to scan
	ProjectionAttributes []string            // Specific attributes to return
	ParallelScanConfig   *ParallelScanConfig // Parallel scan configuration
}

// ParallelScanConfig configures parallel scan operations for improved throughput.
// Divides the table into segments that can be scanned concurrently.
// Each worker scans one segment, reducing overall scan time for large tables.
type ParallelScanConfig struct {
	TotalSegments int // Total number of segments to divide the table into
	Segment       int // Which segment this scan worker should process (0-based)
}

// NewScanBuilder creates a new ScanBuilder instance with initialized mixins.
// All mixins are properly initialized for immediate use.
// Example: scan := NewScanBuilder().FilterEQ("status", "active").Limit(100)
func NewScanBuilder() *ScanBuilder {
	return &ScanBuilder{
		FilterMixin:     NewFilterMixin(),
		PaginationMixin: NewPaginationMixin(),
	}
}

// Limit sets the maximum number of items to evaluate and returns the ScanBuilder
// for method chaining. DynamoDB applies the limit before the filter expression,
// so a page can contain fewer items than the limit (or none) even when more
// matching items remain; a page also ends early once 1 MB of data has been read.
// Example: scan.Limit(100)
func (sb *ScanBuilder) Limit(limit int) *ScanBuilder {
	sb.PaginationMixin.Limit(limit)
	return sb
}
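
// Illustrative sketch (not part of the generated output): because Limit caps
// the items evaluated before filtering, a page may hold fewer matches than
// the limit while data remains. The hypothetical simulation below mimics one
// page over an in-memory slice: it returns the matches and the position to
// resume from (a stand-in for LastEvaluatedKey), or -1 once everything has
// been read. It simplifies DynamoDB, which may still return a key on the
// last full page.
func sketchLimitThenFilter(items []int, start, limit int, keep func(int) bool) (matches []int, next int) {
	end := start + limit
	if end > len(items) {
		end = len(items)
	}
	for _, it := range items[start:end] { // evaluate at most `limit` items
		if keep(it) { // the filter runs only on the evaluated items
			matches = append(matches, it)
		}
	}
	if end == len(items) {
		return matches, -1
	}
	return matches, end
}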

// StartFrom sets the exclusive start key and returns ScanBuilder for method chaining.
// Use LastEvaluatedKey from previous response for pagination.
// Example: scan.StartFrom(previousResponse.LastEvaluatedKey)
func (sb *ScanBuilder) StartFrom(lastEvaluatedKey map[string]types.AttributeValue) *ScanBuilder {
	sb.PaginationMixin.StartFrom(lastEvaluatedKey)
	return sb
}

// WithIndex sets the index name for scanning a secondary index.
// Allows scanning GSI or LSI instead of the main table.
// Index must exist and be in ACTIVE state.
// Example: scan.WithIndex("status-index")
func (sb *ScanBuilder) WithIndex(indexName string) *ScanBuilder {
	sb.IndexName = indexName
	return sb
}

// WithProjection limits the response to the named attributes.
// This reduces the response size, but read capacity is still consumed
// for the full items that are read.
// Example: scan.WithProjection([]string{"id", "name", "status"})
func (sb *ScanBuilder) WithProjection(attributes []string) *ScanBuilder {
	sb.ProjectionAttributes = attributes
	return sb
}

// WithParallelScan configures parallel scan settings for improved throughput.
// Divides the table into segments for concurrent processing by multiple workers.
// totalSegments: how many segments to divide the table (typically number of workers)
// segment: which segment this worker processes (0-based, must be < totalSegments)
// Example: scan.WithParallelScan(4, 0) // Process segment 0 of 4 total segments
func (sb *ScanBuilder) WithParallelScan(totalSegments, segment int) *ScanBuilder {
	sb.ParallelScanConfig = &ParallelScanConfig{
		TotalSegments: totalSegments,
		Segment:       segment,
	}
	return sb
}
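
// Illustrative sketch (not part of the generated output): a parallel scan runs
// one worker per segment, all sharing TotalSegments, each with its own
// Segment in [0, TotalSegments). The hypothetical helper below fans out with
// goroutines and a channel; scanSegment stands in for code that would call
// NewScanBuilder().WithParallelScan(total, segment).Execute(ctx, client)
// (and, in practice, page through that segment). Results are concatenated
// in segment order.
func sketchParallelScan[T any](total int, scanSegment func(segment, total int) ([]T, error)) ([]T, error) {
	if total < 1 {
		return nil, fmt.Errorf("total segments must be at least 1, got %d", total)
	}
	bySegment := make([][]T, total)
	errs := make(chan error, total) // buffered: one send per worker, never blocks
	for seg := 0; seg < total; seg++ {
		go func(seg int) {
			items, err := scanSegment(seg, total)
			bySegment[seg] = items // each worker writes only its own slot
			errs <- err
		}(seg)
	}
	var firstErr error
	for i := 0; i < total; i++ { // wait for every worker before reading results
		if err := <-errs; err != nil && firstErr == nil {
			firstErr = err
		}
	}
	if firstErr != nil {
		return nil, firstErr
	}
	var all []T
	for _, items := range bySegment {
		all = append(all, items...)
	}
	return all, nil
}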

// Filter adds a filter condition and returns ScanBuilder for method chaining.
// Wraps FilterMixin.Filter with fluent interface support.
func (sb *ScanBuilder) Filter(field string, op OperatorType, values ...any) *ScanBuilder {
	sb.FilterMixin.Filter(field, op, values...)
	return sb
}

// CONVENIENCE METHODS - Only available in ALL mode

// FilterEQ adds equality filter and returns ScanBuilder for method chaining.
// Example: scan.FilterEQ("status", "active")
func (sb *ScanBuilder) FilterEQ(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterEQ(field, value)
	return sb
}

// FilterContains adds contains filter and returns ScanBuilder for method chaining.
// Works with String attributes (substring) and Set attributes (membership).
// Example: scan.FilterContains("tags", "premium")
func (sb *ScanBuilder) FilterContains(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterContains(field, value)
	return sb
}

// FilterNotContains adds not contains filter and returns ScanBuilder for method chaining.
// Opposite of FilterContains for exclusion filtering.
func (sb *ScanBuilder) FilterNotContains(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterNotContains(field, value)
	return sb
}

// FilterBeginsWith adds begins_with filter and returns ScanBuilder for method chaining.
// Only works with String attributes for prefix matching.
// Example: scan.FilterBeginsWith("email", "admin@")
func (sb *ScanBuilder) FilterBeginsWith(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterBeginsWith(field, value)
	return sb
}

// FilterBetween adds range filter and returns ScanBuilder for method chaining.
// Works with comparable types for inclusive range filtering.
// Example: scan.FilterBetween("score", 80, 100)
func (sb *ScanBuilder) FilterBetween(field string, start, end any) *ScanBuilder {
	sb.FilterMixin.FilterBetween(field, start, end)
	return sb
}

// FilterGT adds greater than filter and returns ScanBuilder for method chaining.
// Example: scan.FilterGT("last_login", cutoffDate)
func (sb *ScanBuilder) FilterGT(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterGT(field, value)
	return sb
}

// FilterLT adds less than filter and returns ScanBuilder for method chaining.
// Example: scan.FilterLT("attempts", maxAttempts)
func (sb *ScanBuilder) FilterLT(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterLT(field, value)
	return sb
}

// FilterGTE adds greater than or equal filter and returns ScanBuilder for method chaining.
// Example: scan.FilterGTE("age", minimumAge)
func (sb *ScanBuilder) FilterGTE(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterGTE(field, value)
	return sb
}

// FilterLTE adds less than or equal filter and returns ScanBuilder for method chaining.
// Example: scan.FilterLTE("file_size", maxFileSize)
func (sb *ScanBuilder) FilterLTE(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterLTE(field, value)
	return sb
}

// FilterExists adds attribute exists filter and returns ScanBuilder for method chaining.
// Checks if the specified attribute exists in the item.
// Example: scan.FilterExists("optional_field")
func (sb *ScanBuilder) FilterExists(field string) *ScanBuilder {
	sb.FilterMixin.FilterExists(field)
	return sb
}

// FilterNotExists adds attribute not exists filter and returns ScanBuilder for method chaining.
// Checks if the specified attribute does not exist in the item.
func (sb *ScanBuilder) FilterNotExists(field string) *ScanBuilder {
	sb.FilterMixin.FilterNotExists(field)
	return sb
}

// FilterNE adds not equal filter and returns ScanBuilder for method chaining.
// Example: scan.FilterNE("status", "deleted")
func (sb *ScanBuilder) FilterNE(field string, value any) *ScanBuilder {
	sb.FilterMixin.FilterNE(field, value)
	return sb
}

// FilterIn adds IN filter and returns ScanBuilder for method chaining.
// For scalar values only - use FilterContains for DynamoDB Sets.
// Example: scan.FilterIn("category", "books", "electronics", "clothing")
func (sb *ScanBuilder) FilterIn(field string, values ...any) *ScanBuilder {
	sb.FilterMixin.FilterIn(field, values...)
	return sb
}

// FilterNotIn adds NOT_IN filter and returns ScanBuilder for method chaining.
// For scalar values only - use FilterNotContains for DynamoDB Sets.
func (sb *ScanBuilder) FilterNotIn(field string, values ...any) *ScanBuilder {
	sb.FilterMixin.FilterNotIn(field, values...)
	return sb
}

// BuildScan constructs the final DynamoDB ScanInput with all configured options.
// Combines filter conditions, projection attributes, pagination, and parallel scan settings.
// Handles expression building and attribute mapping automatically.
// Example: input, err := scanBuilder.BuildScan()
func (sb *ScanBuilder) BuildScan() (*dynamodb.ScanInput, error) {
	input := &dynamodb.ScanInput{
		TableName: aws.String(TableName),
	}
	if sb.IndexName != "" {
		input.IndexName = aws.String(sb.IndexName)
	}

	exprBuilder := expression.NewBuilder()
	hasExpression := false

	if len(sb.FilterConditions) > 0 {
		combinedFilter := sb.FilterConditions[0]
		for _, condition := range sb.FilterConditions[1:] {
			combinedFilter = combinedFilter.And(condition)
		}
		exprBuilder = exprBuilder.WithFilter(combinedFilter)
		hasExpression = true
	}

	if len(sb.ProjectionAttributes) > 0 {
		var projectionBuilder expression.ProjectionBuilder
		for i, attr := range sb.ProjectionAttributes {
			if i == 0 {
				projectionBuilder = expression.NamesList(expression.Name(attr))
			} else {
				projectionBuilder = projectionBuilder.AddNames(expression.Name(attr))
			}
		}
		exprBuilder = exprBuilder.WithProjection(projectionBuilder)
		hasExpression = true
	}

	if hasExpression {
		expr, err := exprBuilder.Build()
		if err != nil {
			return nil, fmt.Errorf("failed to build scan expression: %w", err)
		}
		if len(sb.FilterConditions) > 0 {
			input.FilterExpression = expr.Filter()
		}
		if len(sb.ProjectionAttributes) > 0 {
			input.ProjectionExpression = expr.Projection()
		}
		if expr.Names() != nil {
			input.ExpressionAttributeNames = expr.Names()
		}
		if expr.Values() != nil {
			input.ExpressionAttributeValues = expr.Values()
		}
	}

	if sb.LimitValue != nil {
		input.Limit = aws.Int32(int32(*sb.LimitValue))
	}
	if sb.ExclusiveStartKey != nil {
		input.ExclusiveStartKey = sb.ExclusiveStartKey
	}
	if sb.ParallelScanConfig != nil {
		input.Segment = aws.Int32(int32(sb.ParallelScanConfig.Segment))
		input.TotalSegments = aws.Int32(int32(sb.ParallelScanConfig.TotalSegments))
	}
	return input, nil
}

// Execute runs the scan against DynamoDB and returns strongly-typed results.
// It builds the input, issues a single Scan request, and unmarshals the
// returned items into SchemaItem structs.
// Only one page is read: DynamoDB returns at most 1 MB of data per Scan call,
// so callers that need every match should page with BuildScan and LastEvaluatedKey.
// Example: items, err := scanBuilder.Execute(ctx, dynamoClient)
func (sb *ScanBuilder) Execute(ctx context.Context, client *dynamodb.Client) ([]SchemaItem, error) {
	input, err := sb.BuildScan()
	if err != nil {
		return nil, err
	}
	result, err := client.Scan(ctx, input)
	if err != nil {
		return nil, fmt.Errorf("failed to execute scan: %w", err)
	}
	var items []SchemaItem
	if err := attributevalue.UnmarshalListOfMaps(result.Items, &items); err != nil {
		return nil, fmt.Errorf("failed to unmarshal scan result: %w", err)
	}
	return items, nil
}

// ItemInput converts a SchemaItem to DynamoDB AttributeValue map format.
// It uses the AWS SDK's attributevalue package for safe and consistent marshaling.
// The resulting map can be used in PutItem, UpdateItem, and other DynamoDB operations.
// Example: attrMap, err := ItemInput(userItem)
func ItemInput(item SchemaItem) (map[string]types.AttributeValue, error) {
	attributeValues, err := attributevalue.MarshalMap(item)
	if err != nil {
		return nil, fmt.Errorf("failed to marshal item: %w", err)
	}
	return attributeValues, nil
}

// ItemsInput converts a slice of SchemaItems to DynamoDB AttributeValue maps
// for batch operations such as BatchWriteItem (at most 25 requests per call).
// Output order matches input order; on failure, the error names the index of
// the item that could not be marshaled.
// Example: attrMaps, err := ItemsInput([]SchemaItem{item1, item2, item3})
func ItemsInput(items []SchemaItem) ([]map[string]types.AttributeValue, error) {
	result := make([]map[string]types.AttributeValue, 0, len(items))
	for i, item := range items {
		av, err := ItemInput(item)
		if err != nil {
			return nil, fmt.Errorf("failed to marshal item at index %d: %w", i, err)
		}
		result = append(result, av)
	}
	return result, nil
}
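
ItemsInput returns one map per item, but a single BatchWriteItem call accepts at most 25 put or delete requests, so larger slices must be split first. A minimal generic chunking sketch follows; `chunk` and `maxBatchWrite` are illustrative names, not part of the generated package.

```go
package main

import "fmt"

// maxBatchWrite is the per-call request limit of DynamoDB BatchWriteItem.
const maxBatchWrite = 25

// chunk splits items into consecutive slices of at most size elements,
// preserving order. The full slice expression caps each chunk's capacity,
// so appending to one chunk cannot overwrite the next.
func chunk[T any](items []T, size int) [][]T {
	if size <= 0 {
		panic("chunk size must be positive")
	}
	var out [][]T
	for start := 0; start < len(items); start += size {
		end := start + size
		if end > len(items) {
			end = len(items)
		}
		out = append(out, items[start:end:end])
	}
	return out
}

func main() {
	ids := make([]int, 60)
	for i := range ids {
		ids[i] = i
	}
	for _, batch := range chunk(ids, maxBatchWrite) {
		fmt.Println(len(batch)) // prints 25, then 25, then 10
	}
}
```

In real code T would be `map[string]types.AttributeValue`, each element wrapped as `types.WriteRequest{PutRequest: &types.PutRequest{Item: av}}`; any UnprocessedItems in the response still have to be resent.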

// UpdateItemInput creates an UpdateItemInput from a complete SchemaItem.
// It extracts the key automatically and updates all non-key attributes.
// Use it when you want to update an entire item with new values.
// Example: input, err := UpdateItemInput(modifiedUserItem)
func UpdateItemInput(item SchemaItem) (*dynamodb.UpdateItemInput, error) {
	key, err := KeyInput(item)
	if err != nil {
		return nil, fmt.Errorf("failed to create key from item for update: %w", err)
	}
	allAttributes, err := marshalItemToMap(item)
	if err != nil {
		return nil, fmt.Errorf("failed to marshal item for update: %w", err)
	}
	updates := extractNonKeyAttributes(allAttributes)
	if len(updates) == 0 {
		return nil, fmt.Errorf("no non-key attributes to update")
	}
	updateExpression, attrNames, attrValues := buildUpdateExpression(updates)
	return &dynamodb.UpdateItemInput{
		TableName:                 aws.String(TableSchema.TableName),
		Key:                       key,
		UpdateExpression:          aws.String(updateExpression),
		ExpressionAttributeNames:  attrNames,
		ExpressionAttributeValues: attrValues,
	}, nil
}
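
The body of buildUpdateExpression is not part of this excerpt. The sketch below is a hypothetical stand-in showing one common way to build such an expression: a `SET #k0 = :v0, ...` clause with sorted keys for deterministic output, where every attribute name goes through a placeholder. That matters for this schema, because `name` and `count` are DynamoDB reserved words and cannot appear bare in an expression. The sketch covers SET only; DynamoDB also supports REMOVE, ADD, and DELETE clauses.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// buildSetExpression renders "SET #k0 = :v0, #k1 = :v1" for the given
// updates plus the name and value placeholder maps. Values are plain
// strings here; generated code works with types.AttributeValue.
// Callers must pass at least one update ("SET " alone is invalid).
// Hypothetical stand-in for the generated buildUpdateExpression.
func buildSetExpression(updates map[string]string) (string, map[string]string, map[string]string) {
	keys := make([]string, 0, len(updates))
	for k := range updates {
		keys = append(keys, k)
	}
	sort.Strings(keys) // map iteration order is random; sort for stable output

	names := make(map[string]string, len(keys))
	values := make(map[string]string, len(keys))
	clauses := make([]string, len(keys))
	for i, k := range keys {
		n, v := fmt.Sprintf("#k%d", i), fmt.Sprintf(":v%d", i)
		names[n] = k
		values[v] = updates[k]
		clauses[i] = n + " = " + v
	}
	return "SET " + strings.Join(clauses, ", "), names, values
}

func main() {
	expr, names, _ := buildSetExpression(map[string]string{"name": "widget", "count": "3"})
	fmt.Println(expr)  // SET #k0 = :v0, #k1 = :v1
	fmt.Println(names) // map[#k0:count #k1:name]
}
```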

// UpdateItemInputFromRaw creates an UpdateItemInput from raw key values and an update map.
// Suited to partial updates: only the attributes in the updates map are modified.
// Use it when you know exactly which fields to change and do not want to load the full item first.
// Example: UpdateItemInputFromRaw("user123", nil, map[string]any{"status": "active", "last_login": time.Now()})
func UpdateItemInputFromRaw(hashKeyValue any, rangeKeyValue any, updates map[string]any) (*dynamodb.UpdateItemInput, error) {
	if err := validateKeyInputs(hashKeyValue, rangeKeyValue); err != nil {
		return nil, err
	}
	if err := validateUpdatesMap(updates); err != nil {
		return nil, err
	}
	key, err := KeyInputFromRaw(hashKeyValue, rangeKeyValue)
	if err != nil {
		return nil, fmt.Errorf("failed to create key for update: %w", err)
	}
	marshaledUpdates, err := marshalUpdatesWithSchema(updates)
	if err != nil {
		return nil, fmt.Errorf("failed to marshal updates: %w", err)
	}
	updateExpression, attrNames, attrValues := buildUpdateExpression(marshaledUpdates)
	return &dynamodb.UpdateItemInput{
		TableName:                 aws.String(TableSchema.TableName),
		Key:                       key,
		UpdateExpression:          aws.String(updateExpression),
		ExpressionAttributeNames:  attrNames,
		ExpressionAttributeValues: attrValues,
	}, nil
}
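
UpdateItemInputWithCondition, defined next, merges the caller's condition placeholders into the maps produced for the update expression via mergeExpressionAttributes, whose body is not shown here. If a caller happens to reuse a placeholder the update builder already chose, a plain map copy would silently overwrite one binding. The sketch below is a hypothetical, stricter merge that reports the collision instead; it is not the generated helper.

```go
package main

import "fmt"

// mergeNoClobber copies src into dst and returns an error if any
// placeholder already exists in dst. dst is modified in place and
// allocated if nil. Hypothetical helper for illustration only.
func mergeNoClobber[V any](dst, src map[string]V) (map[string]V, error) {
	if dst == nil {
		dst = make(map[string]V, len(src))
	}
	for k, v := range src {
		if _, exists := dst[k]; exists {
			return nil, fmt.Errorf("placeholder %q is already used by the update expression", k)
		}
		dst[k] = v
	}
	return dst, nil
}

func main() {
	updateValues := map[string]string{":v0": "active"}
	conditionValues := map[string]string{":expected": "1"}
	merged, err := mergeNoClobber(updateValues, conditionValues)
	fmt.Println(len(merged), err) // 2 <nil>

	_, err = mergeNoClobber(map[string]string{":v0": "active"}, map[string]string{":v0": "1"})
	fmt.Println(err != nil) // true
}
```

Giving condition placeholders a distinct prefix (such as `:expected` above) avoids the collision in the first place; the check simply turns a silent bug into an explicit error.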

// UpdateItemInputWithCondition creates a conditional UpdateItemInput.
// The item is updated only if the condition expression evaluates to true.
// Useful for optimistic locking: if a concurrent writer changed the item first,
// the condition fails with ConditionalCheckFailedException instead of the
// earlier write being silently overwritten.
// Example: UpdateItemInputWithCondition("user123", nil, updates, "version = :v", nil, map[string]types.AttributeValue{":v": &types.AttributeValueMemberN{Value: "1"}})
func UpdateItemInputWithCondition(hashKeyValue any, rangeKeyValue any, updates map[string]any, conditionExpression string, conditionAttributeNames map[string]string, conditionAttributeValues map[string]types.AttributeValue) (*dynamodb.UpdateItemInput, error) {
	if err := validateKeyInputs(hashKeyValue, rangeKeyValue); err != nil {
		return nil, err
	}
	if err := validateUpdatesMap(updates); err != nil {
		return nil, err
	}
	if err := validateConditionExpression(conditionExpression); err != nil {
		return nil, err
	}
	updateInput, err := UpdateItemInputFromRaw(hashKeyValue, rangeKeyValue, updates)
	if err != nil {
		return nil, err
	}
	updateInput.ConditionExpression = aws.String(conditionExpression)

updateInput.ExpressionAttributeNames, updateInput.ExpressionAttributeValues = mergeExpressionAttributes(

updateInput.ExpressionAttributeNames,

updateInput.ExpressionAttributeValues,

conditionAttributeNames,